The onslaught of media in the social media era has made it ever more challenging to know what news to trust. The phrase “fake news” has become part of common parlance as fabricated stories carrying false or misleading claims reach thousands, even millions, of readers each day.

This has created a major problem for traditional fact-checkers like Snopes or Politifact, which simply cannot keep up with the massive volume of news stories circulating on the internet.

These fact-checkers have typically operated by debunking or verifying specific claims made by news outlets or prominent individuals. Because information spreads so quickly these days, this claim-by-claim method has proven too slow to be effective.

A fake news story may be seen by thousands of people before a fact-checker has a chance to correct it. The damage is already done by then.

Now, a team of researchers from MIT’s Computer Science and Artificial Intelligence Lab (CSAIL) and the Qatar Computing Research Institute (QCRI) is offering a new approach.

The researchers have developed a machine learning system that fact-checks not the story but the news source. By analyzing the text of hundreds of articles published by a given news outlet, the system creates a grade for the source based on its accuracy of reporting and bias.

“If a website has published fake news before, there’s a good chance they’ll do it again,” Ramy Baly, a postdoctoral associate at MIT and the lead author on a paper about the system, said in a statement. “By automatically scraping data about these sites, the hope is that our system can help figure out which ones are likely to do it in the first place.”

It is not the first machine learning fact-checking system, but, until now, most of the research has gone into evaluating individual stories rather than news sources as a whole.

The researchers suggest that their broader, source-level approach will be a more cost-effective and efficient tool for both fact-checkers and readers.

“We cannot fact-check every single news story. This is too costly,” said Preslav Nakov, a senior scientist at QCRI and a co-author of the study. “It is much more feasible to check the news outlets. Also, checking a news story takes time, while we can check sources in advance.”

The system uses a variety of information sources to determine a publication’s trustworthiness, Nakov said.

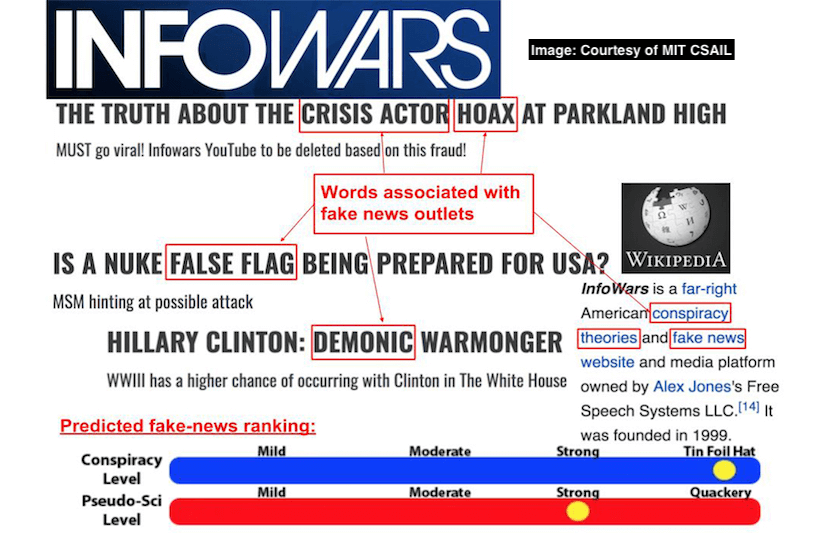

It analyzes the text of a source’s articles. The system looks at the style, subjectivity, sentiment and vocabulary richness, among other characteristics.
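As a rough illustration of the kind of text characteristics described above, the sketch below computes a few simple stylistic signals for one article. The function name `style_features` and the specific measures (type-token ratio as a stand-in for vocabulary richness, average sentence length, and exclamation-mark rate) are assumptions made for illustration, not the researchers' actual feature set.

```python
import re

def style_features(text: str) -> dict:
    """Compute a few toy stylistic signals for one article (illustrative only)."""
    # Lowercased word tokens; apostrophes are kept inside words.
    words = re.findall(r"[a-z']+", text.lower())
    # Split into sentences on terminal punctuation, dropping empty pieces.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    n_words = len(words)
    return {
        # Type-token ratio: distinct words divided by total words.
        "vocab_richness": len(set(words)) / n_words if n_words else 0.0,
        "avg_sentence_len": n_words / len(sentences) if sentences else 0.0,
        "exclamations_per_word": text.count("!") / n_words if n_words else 0.0,
    }

sample = "Shocking! You will not believe this! The truth is out. The truth is here."
features = style_features(sample)
```

In a setup like the one the article describes, signals of this sort would presumably be averaged over many articles from the same outlet before being used to rate the source.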

More emotional or hyperbolic language tends to be a sign of fake news. Keywords and tone can reveal bias; left-leaning publications focus on moral concepts like care, harm, fairness and reciprocity, while right-leaning publications focus on concepts like loyalty, authority and sanctity.
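One simple way to picture this kind of keyword analysis is a lexicon lookup: count how often an article uses words tied to each moral concept and compare the shares. The sketch below does that with a tiny, hypothetical word list. `MORAL_LEXICON` and `moral_profile` are invented for illustration, and the article does not say which lexicon or matching method the researchers use.

```python
import re
from collections import Counter

# Hypothetical mini-lexicon mapping words to moral categories.
# Real lexicons of this kind are far larger; these entries are illustrative.
MORAL_LEXICON = {
    "care": "care_harm", "harm": "care_harm", "protect": "care_harm",
    "fair": "fairness", "equal": "fairness", "justice": "fairness",
    "loyal": "loyalty", "betray": "loyalty", "patriot": "loyalty",
    "authority": "authority", "obey": "authority", "tradition": "authority",
    "sacred": "sanctity", "pure": "sanctity", "faith": "sanctity",
}

def moral_profile(text: str) -> dict:
    """Return the share of lexicon hits that fall into each category."""
    words = re.findall(r"[a-z]+", text.lower())
    # Exact-match lookup only; no stemming, so "fairness" would not match "fair".
    hits = Counter(MORAL_LEXICON[w] for w in words if w in MORAL_LEXICON)
    total = sum(hits.values())
    return {cat: n / total for cat, n in hits.items()} if total else {}

profile = moral_profile(
    "We must protect the vulnerable and demand justice, equal and fair."
)
```

For the sample sentence, one of the four lexicon hits falls under care/harm and three under fairness, so the profile leans toward the concepts the article associates with left-leaning outlets.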

The system needs only about 150 articles to reliably determine how trustworthy a news source is.

The system also looks at the Wikipedia page of a news outlet. The Wikipedia page is assessed based on length and the substance of the text. It searches for target words and phrases like “extreme” or “conspiracy theory” that can help determine the site’s credibility.

Likewise, the system examines a site’s Twitter presence. If there is an account, it looks at how old the account is, how many followers it has, whether it is verified, what is written in the text, and other notable information.

Lastly, it looks at certain website features such as the URL structure and web traffic statistics.
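To make "URL structure" a little more concrete, the sketch below pulls a few structural signals out of a site address using Python's standard `urllib.parse` module. The function `url_features`, the particular signals, and the example address are assumptions for illustration; the article does not list which URL properties the system actually inspects.

```python
from urllib.parse import urlparse

def url_features(url: str) -> dict:
    """Extract a few toy structural signals from a site URL (illustrative only)."""
    parsed = urlparse(url)
    host = parsed.hostname or ""          # hostname is lowercased by urlparse
    labels = host.split(".") if host else []
    return {
        "uses_https": parsed.scheme == "https",
        "host_length": len(host),
        "num_host_labels": len(labels),   # dot-separated parts of the host
        "has_digits_in_host": any(ch.isdigit() for ch in host),
        "has_hyphen_in_host": "-" in host,
        "tld": labels[-1] if labels else "",
    }

# Made-up address, used only to exercise the function.
feats = url_features("http://breaking-news24.example.com.co/politics")
```

None of these signals means much alone. They would only be useful as inputs alongside the text, Wikipedia, Twitter and traffic information described above.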

Each of these features helps to build a complete picture of the website’s bias and credibility.

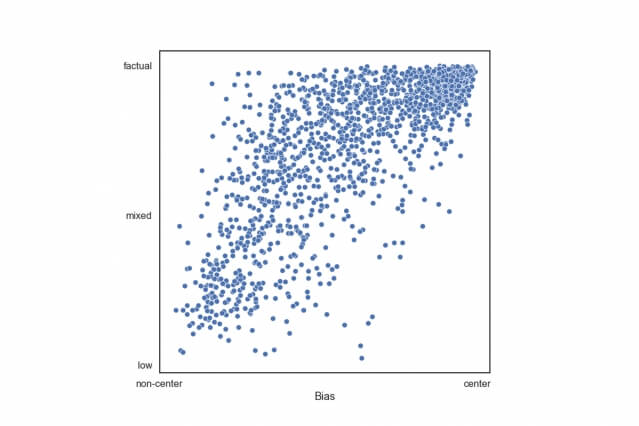

This information is combined with data from Media Bias/Fact Check (MBFC), a website whose human fact-checkers report on the accuracy and bias of over 2,000 news sites. Together, they are fed into a machine learning algorithm called a Support Vector Machine (SVM) classifier.

The SVM is trained to classify news sites by factuality and bias. Using this set of information, it can identify factuality with 65 percent accuracy and determine bias with 70 percent accuracy.
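For readers curious what an SVM classifier does in practice, here is a minimal sketch of a linear SVM trained by sub-gradient descent on the hinge loss, written in plain Python. It is a simplified two-class toy, not the researchers' model: the article does not describe their kernel, training procedure or feature encoding, and the feature vectors, labels and hyperparameters below are invented for illustration.

```python
import random

def train_linear_svm(X, y, epochs=200, lr=0.05, reg=0.01, seed=0):
    """Fit weights w and bias b by minimising L2-regularised hinge loss.

    X: list of feature vectors; y: labels in {-1, +1}.
    """
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    b = 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            margin = y[i] * (sum(wj * xj for wj, xj in zip(w, X[i])) + b)
            # The regularisation term shrinks the weights slightly on every step.
            w = [wj - lr * reg * wj for wj in w]
            if margin < 1:
                # Point is misclassified or inside the margin: move the boundary.
                w = [wj + lr * y[i] * xj for wj, xj in zip(w, X[i])]
                b += lr * y[i]
    return w, b

def predict(w, b, x):
    """Return +1 or -1 depending on which side of the boundary x falls."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1

# Invented toy data: [exclamation rate, vocabulary richness] per news source.
X = [[0.9, 0.1], [0.8, 0.2], [0.85, 0.15],
     [0.1, 0.9], [0.2, 0.8], [0.15, 0.85]]
# Made-up labels: -1 = low factuality, +1 = high factuality.
y = [-1, -1, -1, 1, 1, 1]

w, b = train_linear_svm(X, y)
predictions = [predict(w, b, x) for x in X]
```

The toy data is cleanly separable, so the classifier recovers the training labels. Real source-level data is far noisier, which is consistent with the 65 and 70 percent accuracy figures reported above.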

The researchers have already adapted the system to other languages. They have tested left-right bias on 100 news outlets in nine languages: Arabic, Hungarian, Italian, Turkish, Greek, French, German, Danish and Macedonian.

“We have developed a language-independent model for that, which is slightly worse than the English one, but not by much,” said Nakov.

They are also working on adapting the system to determine bias along different ideological spectrums. As of now, it is only capable of determining left-right bias.

Nakov noted that these divisions are not universal.

“There are reversed notions in Eastern Europe, where left is conservative and right is liberal,” he said. “In other parts of the world, other divisions might make more sense, such as religious versus secular in the Arab world. Or maybe pro-West versus anti-West in some countries. Or pro-Russia versus anti-Russia.”

The researchers said that the system is not intended to function as the be-all and end-all of fact-checking. It could be used in conjunction with traditional fact-checking and machine learning systems that focus on article accuracy.

The team is currently developing a fact-checking news aggregator that compiles news stories from various sources and rates them according to factuality, left-right ideology, propaganda and hyper-partisanship.

QCRI also has plans to develop a news aggregator app that exposes users to different points of view by presenting them with a collection of articles that span the political spectrum.

Together with traditional fact-checkers, this system and others like it could help a reader understand a story’s credibility before reading or sharing it.

“It’s interesting to think about new ways to present the news to people,” Nakov said in a statement. “Tools like this could help people give a bit more thought to issues and explore other perspectives that they might not have otherwise considered.”