From autonomous vehicles to emergency response robots, artificial intelligence (AI) powered by machine learning is quickly entering our daily lives.

AI is developing at an impressive rate and continues to drive improvements in medicine, engineering, robotics and entertainment.

Each day, scientists around the world develop innovative new technology, from flexible robots to algorithms that can detect animal behavior, and women are often at the forefront of such work.

In this article, we highlight 11 women who are “killin’ it” in AI.

[divider]

Dina Katabi

Andrew & Erna Viterbi Professor of Electrical Engineering and Computer Science, MIT

In a groundbreaking new project, Dina Katabi and a team of researchers at MIT have developed a computerized system, dubbed “RF-Pose,” that uses AI to see people through walls.

RF-Pose works by using a neural network to analyze radio signals that bounce off people’s bodies. Since AI learns by example, the researchers taught the machine to associate particular radio signals with specific human actions.

The researchers collected thousands of images of people in activities like walking, talking, standing, sitting, and opening doors and elevators, and used the images to create stick figures posing in the same manner.

They then paired these stick figure poses with corresponding radio signals and showed them to the AI. This allowed the system to detect people’s postures and movement in real time, even from behind walls or in the dark.
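The pairing step can be illustrated with a toy sketch. It is not the researchers' method: RF-Pose uses a neural network, while this example swaps in a simple nearest-neighbor lookup to show only the idea of learning from paired examples. The feature vectors, the pose labels and the names `TRAINING_PAIRS` and `predict_pose` are all hypothetical.

```python
import math

# Hypothetical training pairs: (radio feature vector, pose label).
# In the article's description the labels come from camera images;
# here they are hard-coded toy values, not real measurements.
TRAINING_PAIRS = [
    ((0.9, 0.1, 0.2), "standing"),
    ((0.8, 0.2, 0.1), "standing"),
    ((0.2, 0.9, 0.3), "sitting"),
    ((0.1, 0.8, 0.4), "sitting"),
    ((0.5, 0.4, 0.9), "walking"),
    ((0.4, 0.5, 0.8), "walking"),
]


def predict_pose(radio_features, pairs=TRAINING_PAIRS):
    """Return the pose label of the stored radio example closest to the input."""
    nearest = min(pairs, key=lambda pair: math.dist(pair[0], radio_features))
    return nearest[1]


if __name__ == "__main__":
    # At prediction time only the radio signal is needed, not a camera image.
    print(predict_pose((0.85, 0.15, 0.15)))
```

Once the pairs are stored, the lookup needs only a radio reading, which is why a system trained this way could keep working behind a wall or in the dark.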

Katabi believes the technology can be used for medical purposes, allowing doctors to observe disease progression in Parkinson’s, multiple sclerosis and muscular dystrophy.

“Estimating the human pose is an important task in computer vision with applications in surveillance, activity recognition, gaming, etc.,” Katabi told TUN.

[divider]

Rebecca Kramer-Bottiglio

Assistant Professor of Mechanical Engineering & Materials Science, Yale University

Imagine a flexible robot that could be reprogrammed to perform countless tasks. Better yet, a device that could be used to transform any old useless, inanimate object — your favorite stuffed animal, for example — into a fully functional robot.

Though it may sound like science fiction, Rebecca Kramer-Bottiglio and a group of Yale University researchers are making this a reality.

The research team has developed a programmable elastic material called “OmniSkins” that can be used to make a multipurpose robot on the fly.

These “robotic skins” are composed of elastic sheets embedded with sensors and actuators. They come in different shapes and sizes, and are modular, which means that they can be combined and arranged in various ways to fit different objects and perform different functions.

Instead of being designed to perform a specific task, they are designed with versatility in mind. The idea is for the user to reprogram them to perform whatever task is required at the time, like a robotic Swiss army knife.

“We can take skins and wrap them around one object to perform a task — locomotion, for example — and then take them off and put them on a different object to perform a different task, such as grasping and moving an object,” Kramer-Bottiglio said in a statement.

“We can then take those same skins off that object and put them on a shirt to make an active wearable device.”

[divider]

Monica Emelko

Professor of Civil & Environmental Engineering, University of Waterloo, Canada

Every year, driven by factors such as rising temperatures and the overuse of agricultural fertilizers, more water sources around the country are covered in thick, musty green layers of toxic algae, threatening families and wildlife.

But with the help of Monica Emelko and a team of researchers at the University of Waterloo, AI can help guard our water from toxins.

“It’s critical to have running water, even if we have to boil it, for basic hygiene,” Emelko said in a statement. “If you don’t have running water, people start to get sick.”

The researchers developed an AI system that uses software in combination with a microscope to inexpensively and automatically analyze water samples for algae cells in about one to two hours, including confirmation of results by a human analyst.

The current method can analyze only a small area, looking at just a couple of microorganisms at a time. This system, by contrast, identifies and counts millions of microorganisms from larger water sample volumes in a matter of seconds, using a standard microscope.

“The ability to do all this automatically in a matter of seconds can enable water facilities to perform screenings directly on-site in a frequent and rapid manner to keep water safe,” co-author Alexander Wong told TUN.

[divider]

Sabrina Hoppe

Doctoral Student, University of Stuttgart, Germany

&

Stephanie A. Morey

Doctoral Student, Flinders University, Australia

Sabrina Hoppe and Stephanie A. Morey, working alongside researchers at the University of South Australia, helped to create a machine-learning algorithm that can predict human personality traits by tracking eye movement.

The AI technology can analyze a person’s eye movements and recognize four of the Big Five personality traits: neuroticism, extraversion, agreeableness and conscientiousness.

To test the algorithm, the researchers recruited 42 participants and fitted them with a 60 Hz head-mounted video-based eye tracker that recorded gaze data and high-resolution video while they performed daily tasks around a college campus.

Then, the researchers compared the gaze data with personality traits by giving the participants three established self-reporting questionnaires, and found that people’s eye movements can reveal whether they are social, conscientious, or curious.

The software opens up the possibility of one day developing robots that are in tune with human signals and socialization.

“Human-machine interactions are currently unnatural. The ATM, computer, phone don’t adapt to our mood or the current situational context,” Tobias Loetscher, a senior lecturer in the School of Psychology, Social Work and Social Policy at the University of South Australia, told TUN.

“It doesn’t matter whether I’m happy, angry, confused, ironic, irritated – the computer is not empathic and not adapting to my situation. If we manage to provide computers with the ability to sense and understand human social signals, the interactions will become more natural and pleasant.”

[divider]

Shuting Han

Graduate Student, Columbia University

Animal behavior has been the subject of scientific research since the days of Aristotle, but until now, studies in this field have been restricted to hours of scrupulous observation and note-taking.

But with the help of Shuting Han and her team at Columbia University, AI can now be used to study animal behavior both quickly and effectively.

The researchers have developed an innovative algorithm that can successfully analyze hours of video of Hydra, a minuscule freshwater invertebrate, and understand its full range of behaviors.

By filtering out irrelevant information, the algorithm is capable of detecting tendencies in the animal’s behavior.

It works as a “bag-of-words” algorithm, a popular approach often used to filter email spam. The algorithm learns to classify different visual patterns, such as shapes and motions, in videos of Hydra and to pick out repetitive movements.

In doing so, the technology identifies the different behavioral patterns of the animal.
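A minimal sketch of the bag-of-words idea follows, assuming each video clip has already been reduced to a sequence of "visual word" tokens. The token names, the behavior labels and the helper functions are hypothetical, and the cosine-similarity matching is a simple stand-in for whatever classifier the researchers actually used.

```python
import math
from collections import Counter


def bag_of_words(tokens):
    """Count how often each token occurs, ignoring the order of tokens."""
    return Counter(tokens)


def cosine_similarity(a, b):
    """Cosine similarity between two token-count histograms."""
    dot = sum(a[key] * b[key] for key in a.keys() & b.keys())
    norm_a = math.sqrt(sum(value * value for value in a.values()))
    norm_b = math.sqrt(sum(value * value for value in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical labelled clips: behavior name -> visual words seen in the clip.
LABELLED_CLIPS = {
    "contraction": ["shrink", "shrink", "still", "shrink"],
    "elongation": ["stretch", "stretch", "still", "stretch"],
    "bending": ["bend", "sway", "bend", "still"],
}


def classify(tokens):
    """Return the label whose histogram is most similar to the query clip."""
    query = bag_of_words(tokens)
    return max(
        LABELLED_CLIPS,
        key=lambda label: cosine_similarity(query, bag_of_words(LABELLED_CLIPS[label])),
    )


if __name__ == "__main__":
    print(classify(["still", "shrink", "shrink"]))
```

Because only the counts matter, two clips containing the same movements in a different order produce the same histogram. That property is what lets this kind of method pick out repetitive movements regardless of when they occur in a clip.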

“Although we used our approach to construct the complete behavior map of Hydra, our method also provides a general framework for behavior recognition of deformable animals, and is potentially applicable to all animal species,” Han told TUN.

“In fact, combined with the complete neural activity map of an animal, our study opens the possibility of decoding the neural code for all behaviors in an animal, and could enable potential breakthroughs in neuroscience, evolutionary biology, artificial intelligence and machine learning.”

[divider]

Joni Holmes

Head of the Centre for Attention Learning and Memory (CALM), Medical Research Council’s Cognition and Brain Sciences Unit, University of Cambridge, UK

Joni Holmes, alongside fellow researchers at the University of Cambridge, used AI to identify clusters of learning difficulties in children.

The researchers used data from hundreds of academically struggling children, many of whom had previously been diagnosed with learning disorders such as attention deficit hyperactivity disorder (ADHD), autism and dyslexia, and found that many of the reported learning difficulties did not match a general diagnosis.

They discovered this by supplying a computer algorithm with cognitive testing data from 550 children, which included measures of listening skills, spatial reasoning, problem-solving, vocabulary and memory.

After analyzing the data, the algorithm suggested that the children best fit into four clusters of difficulties, which aligned with other educational data and parental reports, but not with previous diagnoses.

By including children with all difficulties regardless of diagnosis, the algorithm can better capture the range of difficulties within the diagnostic categories, and the overlap between them.
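To show how an algorithm can group children by test scores without looking at diagnoses, here is a toy clustering sketch. The article does not name the algorithm the Cambridge team used, so k-means serves as a stand-in. The two-dimensional scores and the function name `k_means` are made up for illustration.

```python
import math


def k_means(points, k, iterations=20):
    """Group points into k clusters; returns (labels, centers)."""
    # Deterministic start: use the first k points as the initial centers.
    centers = [points[i] for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for point in points:
            nearest = min(range(k), key=lambda i: math.dist(point, centers[i]))
            clusters[nearest].append(point)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else centers[i]
            for i, cluster in enumerate(clusters)
        ]
    labels = [min(range(k), key=lambda i: math.dist(p, centers[i])) for p in points]
    return labels, centers


# Hypothetical (memory score, vocabulary score) pairs; not real study data.
SCORES = [
    (2.0, 8.0), (8.0, 2.0), (2.0, 2.0), (8.0, 8.0),
    (2.5, 7.5), (7.5, 2.5), (2.5, 2.5), (7.5, 7.5),
    (1.5, 8.5), (8.5, 1.5), (1.5, 1.5), (8.5, 8.5),
]

if __name__ == "__main__":
    labels, centers = k_means(SCORES, k=4)
    print(labels)
```

The grouping comes only from the scores themselves. No diagnosis is passed in, which mirrors how the clusters in the study could end up cutting across the diagnostic categories.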

This allows researchers to understand that a general diagnosis is not the same for every student. For example, one student with ADHD may be experiencing learning in an entirely different way than another student with ADHD.

“Our work suggests that children who are finding the same subjects difficult could be struggling for very different reasons, which has important implications for selecting appropriate interventions,” Holmes said in a statement.

[divider]

Miyuki Hino, Elinor Benami & Nina Brooks

Graduate Students, Emmett Interdisciplinary Program on Environment and Resources, Stanford University

Government environmental regulators are often overworked and underfunded, causing massive environmental hazards to go undetected each year.

Seeing this as a huge problem, Stanford University graduate students Miyuki Hino, Elinor Benami and Nina Brooks turned to machine-learning technology for help.

Led by Hino, the student team focused on the Clean Water Act and trained computers to automatically detect patterns in data using information from past water facility inspections.

They deployed a series of models to predict the likelihood of failing an inspection based on facility characteristics, such as location, industry and inspection history. The computers then generated a risk score for each facility, indicating how likely it was to fail an inspection.
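A risk score of this kind can be sketched as follows. This is only an illustration: the article says the team used a series of models without naming them, so a logistic function with hand-picked weights stands in here. The feature names, weights and facilities are hypothetical, and a real model would learn its weights from past inspection records.

```python
import math

# Hypothetical weights; a real model would learn these from past inspections.
WEIGHTS = {
    "past_failures": 0.8,
    "years_since_inspection": 0.3,
    "is_industrial": 0.5,
}
BIAS = -2.0


def risk_score(facility):
    """Map facility characteristics to a failure likelihood between 0 and 1."""
    z = BIAS + sum(WEIGHTS[name] * facility[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def rank_by_risk(facilities):
    """Order facilities from highest to lowest predicted risk."""
    return sorted(facilities, key=risk_score, reverse=True)


# Made-up facilities for illustration only.
FACILITIES = [
    {"name": "A", "past_failures": 3, "years_since_inspection": 5, "is_industrial": 1},
    {"name": "B", "past_failures": 0, "years_since_inspection": 1, "is_industrial": 0},
    {"name": "C", "past_failures": 1, "years_since_inspection": 2, "is_industrial": 1},
]

if __name__ == "__main__":
    for facility in rank_by_risk(FACILITIES):
        print(facility["name"], round(risk_score(facility), 2))
```

Sorting by score gives inspectors with a limited budget a natural place to start: the facilities at the top of the list.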

This allows environmental regulators to prioritize and predict hazardous violations.

“Especially in an era of decreasing budgets, identifying cost-effective ways to protect public health and the environment is critical,” Benami said in a statement.

Hino noted that machine learning has its drawbacks.

“Algorithms are imperfect, they can perpetuate bias at times and they can be gamed,” she said in a statement.

But the team suggested remedies for these limitations and methods to integrate machine learning into enforcement efforts.

“This model is a starting point that could be augmented with greater detail on the costs and benefits of different inspections, violations and enforcement responses,” Brooks said in a statement.

[divider]

Narges Razavian

Assistant Professor in the Departments of Radiology and Population Health, NYU School of Medicine

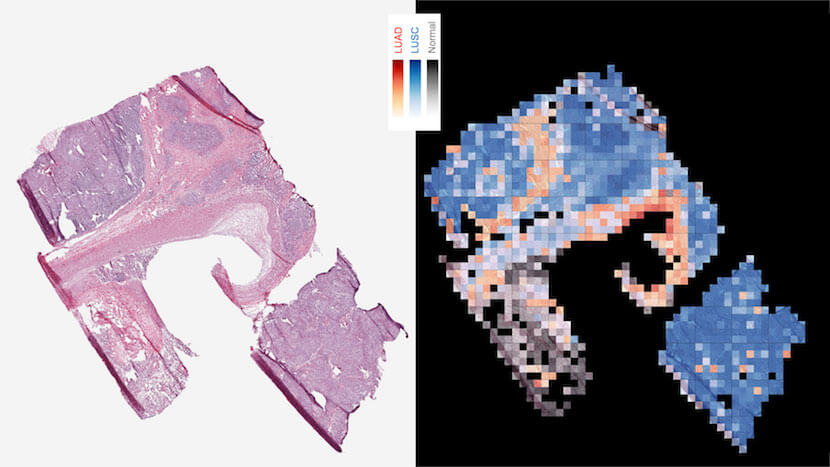

Identifying lung cancer types can be difficult for even experienced pathologists, but with the help of Narges Razavian and a machine-learning program, diagnostic accuracy can be improved.

Razavian and her research team developed an AI program that can distinguish with 97 percent accuracy between adenocarcinoma and squamous cell carcinoma — two of the more difficult cancer types to identify without confirmatory tests.

The program can also determine whether abnormal versions of six genes linked to lung cancer — including EGFR, KRAS and TP53 — are present in cells, with an accuracy that ranges between 73 and 86 percent, depending on the gene type.

Such genetic mutations are often responsible for abnormal growth, and they can leave visual clues that automated analysis can pick up. Unfortunately, the genetic tests currently used to confirm the presence of mutations can take weeks to return results.

With this new AI approach, doctors can instantly determine cancer subtype and mutational status in order to get patients started on therapy sooner.

“In our study, we were excited to improve on pathologist-level accuracies, and to show that AI can discover previously unknown patterns in the visible features of cancer cells and the tissues around them,” Razavian said in a statement.

“The synergy between data and computational power is creating unprecedented opportunities to improve both the practice and the science of medicine.”

[divider]

Conclusion

Though AI developments may often feel like science fiction, in reality, such incredible breakthroughs are happening at unprecedented, and often unimaginable, rates.

AI is aiding our world in nearly every field of science, and each of these women has contributed significant work to our increasingly technological world.